Highlighted Competitions

Malaria Detection

Classifying malaria parasites in blood slide images to enable early-stage diagnosis

Mapping Kelp Forests

Helping researchers track the health of giant kelp forests by segmenting satellite imagery

Previous Competitions & Projects

Deforestation Drivers

Using satellite images to identify deforestation drivers to promote sustainable land use

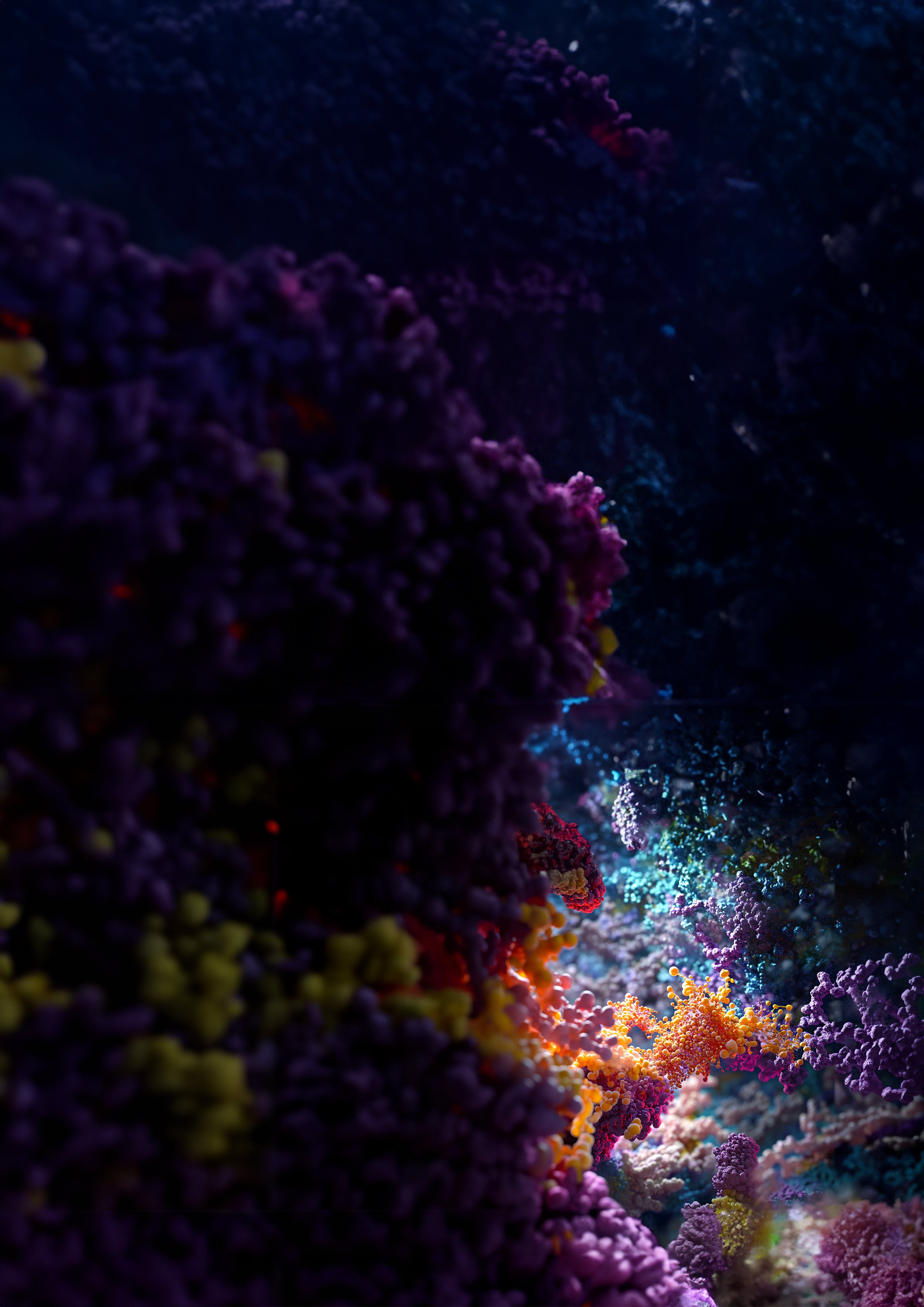

CryoET Object Identification

Annotating protein complexes in 3D cellular images to accelerate biomedical discoveries and disease treatment

Literacy Screening

Scoring audio clips from literacy screeners, helping educators provide effective early literacy intervention

Deforestation Drivers

Using satellite images to identify deforestation drivers to promote sustainable land use

About the competition

Identifying the drivers of deforestation is key to reducing its harmful effects on forests. Understanding these drivers enables smarter conservation strategies and more sustainable land management practices.

The goal of this competition is to train an AI model that identifies and segments the causes of deforestation in satellite images. Participants will work with labeled data showing land-use changes, such as agricultural expansion, mining, and urban growth, in tropical regions like the Amazon and Southeast Asia. The resulting models will help researchers understand how the factors behind deforestation differ across regions, enabling precise and effective conservation strategies for better forest protection.

Solafune

The competition is organized by Solafune, a company that develops satellite and geospatial data technology. Its tools serve industries such as agriculture, disaster response, natural resource management, finance, defense, marketing, and insurance, helping tackle critical social and business challenges.

In addition to their technology, Solafune hosts data science competitions to foster collaboration and innovation. Their mission is to analyze global events and drive meaningful change using satellite technology.

Relevance

Deforestation is a global crisis, causing biodiversity loss, climate change, and water cycle disruptions. Addressing its root causes is crucial for protecting ecosystems, supporting communities, and fighting climate change.

This competition challenges participants to develop an AI model to track deforestation more accurately. The insights gained from the AI model will help shape future conservation strategies, drive reforestation efforts, and promote sustainable development.

“Understanding the drivers of deforestation is key to protecting the planet’s most critical ecosystems.”

Technical details

For this competition, 12-band data from the Sentinel-2 satellite was used. Besides the RGB channels, these bands include measurements at longer wavelengths, such as near-infrared and short-wave infrared. The goal was to segment each image into four classes: Mining, Logging, Grassland/Shrubland, and Plantation. In a final step, the model's segmentation masks were converted into polygons. The main challenge was building a model that performs well despite the small training set of only 176 annotated images.
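
To make the mask-to-polygon step concrete, here is a minimal, hypothetical sketch in plain Python. It groups 4-connected pixels of the same class in a predicted mask and emits one rectangular polygon per region. The label encoding, the `mask_to_boxes` name, and the `min_pixels` filter are assumptions for illustration, not the team's pipeline. Bounding rectangles are a deliberate simplification: a thin diagonal logging road would get a very loose box. Exact outlines would normally come from a GIS library such as rasterio (`rasterio.features.shapes`).

```python
from collections import deque

# Hypothetical label encoding: 0 = background, 1-4 = the four driver classes.
CLASS_NAMES = {1: "mining", 2: "logging", 3: "grassland_shrubland", 4: "plantation"}


def mask_to_boxes(mask, min_pixels=1):
    """Group 4-connected pixels of the same class and return one region per
    group, each with a rectangular polygon given as a closed ring of (x, y)
    pixel-edge coordinates. A simplification of exact outline tracing."""
    height, width = len(mask), len(mask[0])
    seen = [[False] * width for _ in range(height)]
    regions = []
    for r in range(height):
        for c in range(width):
            cls = mask[r][c]
            if cls == 0 or seen[r][c]:
                continue
            # Breadth-first flood fill over same-class 4-neighbours.
            queue = deque([(r, c)])
            seen[r][c] = True
            pixels = []
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < height and 0 <= nx < width
                            and not seen[ny][nx] and mask[ny][nx] == cls):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(pixels) < min_pixels:
                continue  # drop tiny, likely spurious regions
            xs = [px for _, px in pixels]
            ys = [py for py, _ in pixels]
            # +1 on the max side: the ring follows pixel edges, not centres.
            x0, x1 = min(xs), max(xs) + 1
            y0, y1 = min(ys), max(ys) + 1
            regions.append({
                "class": CLASS_NAMES.get(cls, cls),
                "pixels": len(pixels),
                "polygon": [(x0, y0), (x1, y0), (x1, y1), (x0, y1), (x0, y0)],
            })
    return regions
```

Raising `min_pixels` trades recall for fewer speckle polygons, which matters most for a small, noisy training set.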

Another major challenge was class imbalance. For example, the logging class appeared in only a few training images and usually had a long, thin structure, unlike the plantation and grassland classes, which covered larger areas.
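
The text does not say how the imbalance was handled. One common remedy, sketched here as an assumption, is to weight the loss per class by inverse pixel frequency so that rare classes such as logging contribute more to training. `inverse_frequency_weights` is a hypothetical helper that operates on masks stored as nested lists of integer class labels.

```python
def inverse_frequency_weights(masks, num_classes):
    """Hypothetical helper: per-class loss weights proportional to
    1 / (pixel frequency), rescaled so present classes average to 1.
    Classes that never occur in the masks get weight 0."""
    counts = [0] * num_classes
    for mask in masks:
        for row in mask:
            for label in row:
                counts[label] += 1
    total = sum(counts)
    # total / n = how many times rarer than "all pixels" a class is.
    raw = [total / n if n else 0.0 for n in counts]
    present = [w for w in raw if w > 0]
    scale = len(present) / sum(present)
    return [w * scale for w in raw]
```

In PyTorch, for example, such a list could be converted to a tensor and passed as the `weight` argument of `torch.nn.CrossEntropyLoss`. Pure inverse frequency can over-amplify extremely rare classes, so weights are often dampened (e.g. by a square root) in practice.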

The work focused on three areas: augmentations, model architectures, and model ensembling. Multiple augmentations were tried to help the model generalize. Different architectures, such as U-Net and Feature Pyramid Networks (FPN), were used because they performed well on different classes. Lastly, we implemented several ways of ensembling the models: averaging their predictions, using specific models for specific classes, and fitting a ridge regression model on top of the individual models' predictions.
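
The three ensembling strategies can be sketched in plain Python. This is an illustrative sketch, not the team's code. It assumes that each model's output is a list of per-pixel class-probability vectors (`preds[m][p][k]`). `model_for_class` is a hypothetical mapping from each class to the model trusted for it. The stacker fits one closed-form ridge regression per class, without intercept, on the models' scores for that class.

```python
def argmax(values):
    return max(range(len(values)), key=values.__getitem__)


def average_ensemble(preds):
    """preds[m][p][k]: probability of class k at pixel p from model m.
    Average the models' probabilities, then take the most likely class."""
    n_models, n_pixels, n_classes = len(preds), len(preds[0]), len(preds[0][0])
    return [argmax([sum(model[p][k] for model in preds) / n_models
                    for k in range(n_classes)])
            for p in range(n_pixels)]


def per_class_ensemble(preds, model_for_class):
    """Take class k's score from the model assigned to that class
    (model_for_class[k]), then pick the highest-scoring class."""
    return [argmax([preds[m][p][k] for k, m in enumerate(model_for_class)])
            for p in range(len(preds[0]))]


def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= factor * m[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][k] * x[k] for k in range(r + 1, n))) / m[r][r]
    return x


def fit_ridge(features, targets, alpha=1.0):
    """Closed-form ridge regression without intercept:
    w = (X^T X + alpha * I)^-1 X^T y."""
    d = len(features[0])
    xtx = [[sum(f[i] * f[j] for f in features) + (alpha if i == j else 0.0)
            for j in range(d)] for i in range(d)]
    xty = [sum(f[i] * t for f, t in zip(features, targets)) for i in range(d)]
    return solve(xtx, xty)


def fit_stacker(preds, labels, alpha=1.0):
    """One ridge model per class: features are the models' scores for
    class k at a pixel, target is 1.0 if the true label is k, else 0.0."""
    n_classes = len(preds[0][0])
    weights = []
    for k in range(n_classes):
        feats = [[model[p][k] for model in preds] for p in range(len(labels))]
        target = [1.0 if y == k else 0.0 for y in labels]
        weights.append(fit_ridge(feats, target, alpha))
    return weights


def stack_predict(preds, weights):
    """Score each class with its ridge model and pick the best per pixel."""
    return [argmax([sum(w * model[p][k] for w, model in zip(w_k, preds))
                    for k, w_k in enumerate(weights)])
            for p in range(len(preds[0]))]
```

For example, consider two models whose per-pixel outputs are `[[0.8, 0.2], [0.55, 0.45]]` and `[[0.4, 0.6], [0.3, 0.7]]`. Here `average_ensemble` returns `[0, 1]`, while `per_class_ensemble(preds, [0, 0])` simply reproduces the first model's decisions, `[0, 0]`. To avoid leakage, the stacker should be fitted on predictions for images the base models were not trained on, such as out-of-fold predictions.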

UN Sustainable Development Goals

This competition contributes to UN Sustainable Development Goals 13 (Climate Action) and 15 (Life on Land). By identifying the key drivers of deforestation, we are advancing efforts to protect, restore, and promote the sustainable use of terrestrial ecosystems.

Status: Finished

CryoET Object Identification

Annotating protein complexes in 3D cellular images to accelerate biomedical discoveries and disease treatment

About the competition

Protein complexes are essential for cell function, and understanding their interactions can advance human health and the treatment of disease. Cryo-electron tomography (cryoET) generates 3D images of proteins in their natural environment at near-atomic resolution, offering detailed insights into cellular function.

However, standardized cryoET tomograms remain underexplored because protein identification is difficult to automate. Manual annotation is time-consuming and limited by human capabilities. Automating it could reveal the cell's "dark matter" and lead to discoveries that improve human health.

This competition aims to train an AI model to automatically annotate five types of protein complexes using a real-world cryoET dataset, accelerating discoveries and unlocking the mysteries of the cell.

CZ Imaging Institute

The competition is organized by the Chan Zuckerberg Imaging Institute, which develops advanced imaging technologies that enable new insights into health and disease. By creating cutting-edge tools and biological probes, it empowers researchers to visualize cellular structures at unmatched resolution.

Focused on collaboration, the institute drives innovation through open-source tools, groundbreaking research, and accessible imaging systems, advancing biology and biomedicine.

Relevance

Understanding protein complexes is crucial for addressing global health challenges. Diseases like cancer, neurodegenerative disorders, and infections are rooted in the interactions of these cellular components. CryoET imaging provides new insights, but without automated annotation tools, these images remain largely underexplored.

This competition directly supports advancements in healthcare by developing AI models that make cryoET data more accessible and interpretable. These tools will empower scientists to identify molecular targets, design effective treatments, and improve patient outcomes on a global scale.

“Revealing the hidden complexities of the cell to drive breakthroughs in health and disease”

Technical details

The dataset for this competition consists of 3D tomograms: volumetric cubes containing protein complexes. The goal is to develop a multiclass localization model that identifies the center of every protein complex in a tomogram, along with its type.
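
The scoring rule is not described here. The sketch below assumes a common setup for point localization: a predicted center counts as a hit if it lies within a distance `radius` of a not-yet-matched true center of the same class. Predictions are hypothetical `(class, x, y, z)` tuples. The greedy matching is a simplification, since an optimal one-to-one assignment could match slightly more pairs.

```python
import math


def match_centers(predicted, truth, radius):
    """Greedily match predicted (class, x, y, z) centers to unmatched true
    centers of the same class within `radius`.
    Returns (true_positives, false_positives, false_negatives)."""
    unmatched = list(truth)
    tp = 0
    for cls, *pos in predicted:
        best, best_dist = None, radius
        for i, (t_cls, *t_pos) in enumerate(unmatched):
            if t_cls != cls:
                continue
            dist = math.dist(pos, t_pos)
            if dist <= best_dist:
                best, best_dist = i, dist
        if best is not None:
            unmatched.pop(best)  # each true center can be matched only once
            tp += 1
    return tp, len(predicted) - tp, len(unmatched)


def precision_recall(tp, fp, fn):
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall
```

The class names used with these helpers (e.g. `"protein_a"`) are placeholders; the five real target complexes are not named in this description.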

One of the key challenges is the extremely low signal-to-noise ratio (SNR). This stems from the nature of electron tomography, the technique used to image the proteins: a high electron dose cannot be used, as it would damage the delicate samples. Localizing proteins accurately despite this low SNR remains a significant hurdle.
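
The text does not describe the team's preprocessing. A 3D mean (box) filter is a simple way to illustrate the SNR trade-off. Assuming independent noise, averaging n voxels reduces the noise standard deviation by a factor of √n, so a 3×3×3 window cuts it by about √27 ≈ 5.2 in the interior. The cost is that small structures get blurred. The pure-Python `box_filter_3d` below is a hypothetical helper. With SciPy, `scipy.ndimage.uniform_filter` provides a fast equivalent, with different border handling.

```python
def box_filter_3d(volume, radius=1):
    """Replace each voxel of volume[z][y][x] by the mean of its
    (2 * radius + 1)^3 neighbourhood; the window is clipped at the borders,
    so edge voxels average fewer neighbours."""
    depth, height, width = len(volume), len(volume[0]), len(volume[0][0])
    out = [[[0.0] * width for _ in range(height)] for _ in range(depth)]
    for z in range(depth):
        z_lo, z_hi = max(0, z - radius), min(depth, z + radius + 1)
        for y in range(height):
            y_lo, y_hi = max(0, y - radius), min(height, y + radius + 1)
            for x in range(width):
                x_lo, x_hi = max(0, x - radius), min(width, x + radius + 1)
                total, count = 0.0, 0
                for zz in range(z_lo, z_hi):
                    for yy in range(y_lo, y_hi):
                        for xx in range(x_lo, x_hi):
                            total += volume[zz][yy][xx]
                            count += 1
                out[z][y][x] = total / count
    return out
```

A larger `radius` suppresses more noise but also smears proteins that are only a few voxels across, so the window size has to be matched to the smallest target.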

The team is currently focusing on segmentation models whose outputs are converted into localization coordinates during post-processing. So far, YOLO and U-Net models have proven the most effective for this use case.
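
The post-processing step can be illustrated with a minimal, hypothetical sketch. It groups 6-connected voxels of the same predicted class into components and drops components smaller than `min_voxels`. It then reports each component's centroid as a `(class, x, y, z)` tuple. The mask layout (`mask[z][y][x]`, 0 = background) and the size filter are assumptions. With SciPy, `scipy.ndimage.label` and `scipy.ndimage.center_of_mass` cover the same steps. A YOLO-style detector would instead yield centers directly from its predicted boxes.

```python
from collections import deque

# The six face-adjacent neighbour offsets (dz, dy, dx).
NEIGHBOURS = ((1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1))


def mask_to_centers(mask, min_voxels=1):
    """Hypothetical helper: turn a 3D class mask mask[z][y][x] (0 = background)
    into (class, x, y, z) centroids of 6-connected same-class components."""
    depth, height, width = len(mask), len(mask[0]), len(mask[0][0])
    seen = set()
    centers = []
    for z in range(depth):
        for y in range(height):
            for x in range(width):
                cls = mask[z][y][x]
                if cls == 0 or (z, y, x) in seen:
                    continue
                # Flood-fill the component that contains this voxel.
                queue = deque([(z, y, x)])
                seen.add((z, y, x))
                voxels = []
                while queue:
                    cz, cy, cx = queue.popleft()
                    voxels.append((cz, cy, cx))
                    for dz, dy, dx in NEIGHBOURS:
                        nz, ny, nx = cz + dz, cy + dy, cx + dx
                        if (0 <= nz < depth and 0 <= ny < height and 0 <= nx < width
                                and (nz, ny, nx) not in seen
                                and mask[nz][ny][nx] == cls):
                            seen.add((nz, ny, nx))
                            queue.append((nz, ny, nx))
                if len(voxels) < min_voxels:
                    continue  # too small to be a real protein complex
                n = len(voxels)
                centers.append((cls,
                                sum(v[2] for v in voxels) / n,   # x
                                sum(v[1] for v in voxels) / n,   # y
                                sum(v[0] for v in voxels) / n))  # z
    return centers
```

Touching particles of the same class merge into one component under this scheme. Pipelines often split them afterwards, for example with a distance-based watershed, which this sketch omits.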

UN Sustainable Development Goals

This competition supports UN Sustainable Development Goal #3: Good Health and Well-Being. The AI model aims to enhance understanding of cellular processes, enabling earlier and more accurate diagnostics and advancing biomedical science.